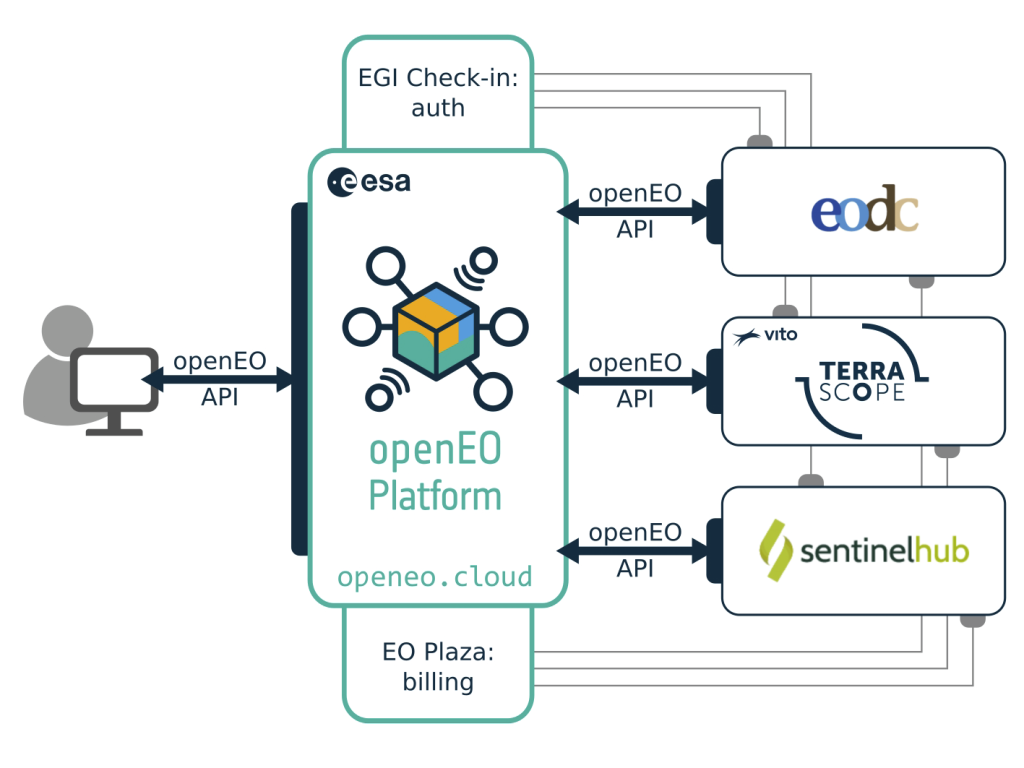

openEO Platform (https://openeo.cloud/) is a platform service built on top of openEO that combines a federated infrastructure with a federated data offer. It brings openEO to production and provides data access and data processing services to the EO community. The project was initially funded by ESA and is now offered through several subscription options (see https://openeo.cloud/).

openEO Platform builds upon the EO cloud processing platforms managed by VITO, Sinergise, and EODC, and on the platform management and software development experience of all partners. openEO Platform unites:

- Terrascope, the Belgian processing infrastructure for Copernicus and PROBA-V data,

- Sentinel Hub, the most advanced on-the-fly satellite data processing engine handling more than one hundred million requests every month, and

- EODC's cloud infrastructure and HPC experience,

integrating all of these with the openEO API, a community project that started with H2020 funding and comes with an ecosystem of user-friendly graphical and command-line clients. The federated components of openEO Platform are shown in the following figure.

To support users, a comprehensive documentation portal has been established at https://docs.openeo.cloud/. It offers everything from getting-started guides to Jupyter notebooks that illustrate more advanced use cases, with the aim of simplifying and accelerating the first steps with openEO Platform and demonstrating its current capabilities. To further facilitate the exchange between users and developers, a discussion forum is available to all registered users at https://forums.openeo.cloud/.